Foundations of Artificial Intelligence

Philosophical Underpinnings

On Philosophy

Can formal rules be used to draw valid conclusions?

How does the mind arise from a physical brain?

Where does knowledge come from?

How does knowledge lead to action?

Aristotle (384–322 BC)

Syllogisms: correct premises -> correct conclusions

We could regard this as mechanical thought.

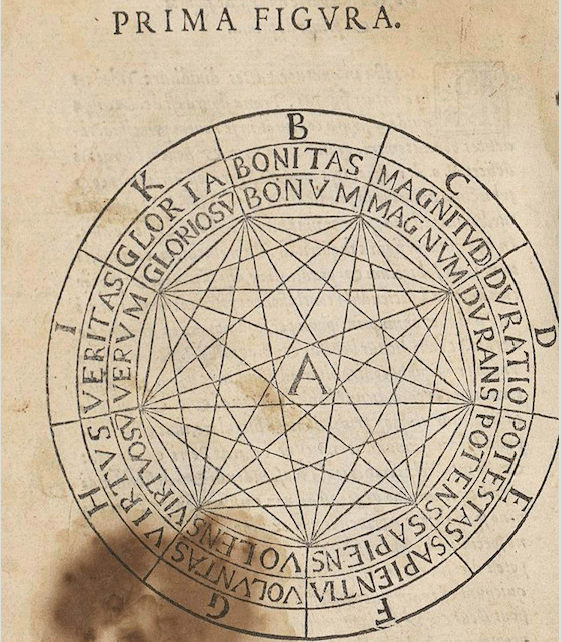

Ramon Llull (1232–1315)

“First Figure. Ars brevis”

Da Vinci (1452–1519)

Blaise Pascal (1623–1662)

The Pascaline

Gottfried Wilhelm Leibniz (1646–1716)

Stepped Reckoner (1694)

… it is beneath the dignity of excellent men to waste their time in calculation when any peasant could do the work just as accurately with the aid of a machine.

— Gottfried Leibniz

Thomas Hobbes (1588–1679)

The “Artificial Animal” in Leviathan

“For what is the heart but a spring; and the nerves, but so many strings; and the joints, but so many wheels.”

“For ‘reason’ … is nothing but ‘reckoning,’ that is adding and subtracting.”

René Descartes (1596–1650)

Let’s be clear, the statements:

“The mind operates according to rules and those rules can also be emulated by a machine”

and

“The mind is a physical machine”

are different

Descartes introduced dualism, which stands in opposition to materialism (and, technically, to idealism).

Empiricism (1561…)

Francis Bacon & John Locke: “Nothing is in the understanding, which was not first in the senses”

That is, the only things that are real are things that can be observed.

Hume’s Induction: we get general rules via repeated exposures to associations between objects.

Knowledge according to Locke

The acts of the mind, wherein it exerts its power over simple ideas, are chiefly these three: 1. Combining several simple ideas into one compound one, and thus all complex ideas are made. 2. The second is bringing two ideas, whether simple or complex, together, and setting them by one another without uniting them into one, by which it gets all its ideas of relations. 3. The third is separating them from all other ideas that accompany them in their real existence: this is called abstraction, and thus all its general ideas are made.

John Locke, An Essay Concerning Human Understanding (1690)

Logical Positivism (1920s…)

Following from Ludwig Wittgenstein and Bertrand Russell, the Vienna Circle developed Logical Positivism.

Put briefly, under Logical Positivism all knowledge is expressible as logical statements connected to observations.

Rudolf Carnap and Carl Hempel (1891–1970 and 1905–1997)

Confirmation theory: acquire knowledge from experience and assign a degree of belief to logical sentences according to observations.

Note that this regards reason as a computational process.

Regarding Action

According to Aristotle, actions arise from the connection between goals and knowledge of an action’s outcome.

“We deliberate not about ends, but about means.” (from Nicomachean Ethics)

Aristotle therefore proposes an algorithm that searches for means to achieve a stated goal (later implemented in the General Problem Solver, GPS).
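The search for means described above can be sketched as a small backward search from the goal. This is only an illustration under assumptions: the `ACTIONS` table, its keys (`needs`, `achieves`), and the `plan` function are hypothetical names invented here, and the sketch is much simpler than GPS (it ignores interactions between subgoals).

```python
# Hypothetical toy domain: each action lists what it needs and what it achieves.
ACTIONS = {
    "call doctor": {"needs": ["have phone"], "achieves": "doctor arrives"},
    "doctor treats patient": {"needs": ["doctor arrives"], "achieves": "patient healthy"},
}


def plan(goal, facts, actions, depth=10):
    """Return a list of action names that achieves `goal`, or None if none is found."""
    if goal in facts:
        return []  # the end is already attained, so no means are required
    if depth == 0:
        return None  # give up rather than recurse forever
    for name, action in actions.items():
        if action["achieves"] != goal:
            continue
        steps = []
        for need in action["needs"]:
            sub_plan = plan(need, facts, actions, depth - 1)
            if sub_plan is None:
                break  # this action cannot be enabled; try another one
            steps += sub_plan
        else:
            # Every precondition can be met, so the action itself comes last.
            return steps + [name]
    return None


print(plan("patient healthy", {"have phone"}, ACTIONS))
```

The search starts from the end and works backwards until it reaches something that can be done immediately, which is the deliberation "about means" in the quotation.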

What of Complex Tasks?

What if we have different ways to achieve a goal?

How do we choose our route?

What if we can’t be certain?

Antoine Arnauld (1662): Rational decisions in gambling?

Daniel Bernoulli (1738): Utility?

Jeremy Bentham (1823) and John Stuart Mill (1863): Utilitarianism (Consequentialism)

Immanuel Kant (1785): deontological ethics, rules-based ethics.
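Bernoulli's notion of utility, listed above, can be made concrete with a small calculation. The sketch below assumes a logarithmic utility of wealth (the form Bernoulli proposed); the two lotteries and all function names are hypothetical values chosen for illustration.

```python
import math


def expected_value(lottery):
    """Average payoff of a lottery given as (probability, final_wealth) pairs."""
    return sum(p * wealth for p, wealth in lottery)


def expected_utility(lottery, utility=math.log):
    """Average utility of a lottery; log utility is an assumption of this sketch."""
    return sum(p * utility(wealth) for p, wealth in lottery)


# Hypothetical choice, expressed as final wealth after the decision.
gamble = [(0.5, 200.0), (0.5, 50.0)]  # coin flip: end with 200 or with 50
sure_thing = [(1.0, 120.0)]           # end with 120 for certain

print(expected_value(gamble), expected_value(sure_thing))
print(expected_utility(gamble), expected_utility(sure_thing))
```

With these numbers the gamble has the higher expected value, but the sure thing has the higher expected utility, so an agent that maximizes expected log utility declines the gamble.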

Mathematical Underpinnings

What are the formal rules to draw valid conclusions?

What can be computed?

How do we reason with uncertain information?

Philosophy was required for the foundation; mathematics was required for the formalization.

Formal Logic

“Formal Logic” has roots in philosophy from Greece, India, and China.

George Boole (1815–1864) developed propositional logic, which Gottlob Frege (1848–1925) extended with objects and relations; this work later inspired Gödel and Turing.

Probability

“Generalizing logic to situations with uncertain information”

Gerolamo Cardano (1501–1576): Gambling outcomes

Blaise Pascal (1623–1662): Deciding payout from an unfinished gambling game with average payouts

“How we finish unfinished or incomplete measurements”
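Pascal's unfinished-game question (the "problem of points") can be sketched as follows, assuming a fair game in which each remaining round is won by either player with probability 1/2. The function names are hypothetical; the pot is divided in proportion to each player's chance of winning had play continued.

```python
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def win_probability(a_needs, b_needs):
    """Chance that player A wins, given how many rounds each player still needs."""
    if a_needs == 0:
        return Fraction(1)
    if b_needs == 0:
        return Fraction(0)
    half = Fraction(1, 2)  # assumption: every round is a fair coin flip
    return (half * win_probability(a_needs - 1, b_needs)
            + half * win_probability(a_needs, b_needs - 1))


def split_pot(pot, a_needs, b_needs):
    """Divide the pot according to each player's expected (average) payout."""
    p = win_probability(a_needs, b_needs)
    return pot * p, pot * (1 - p)


# A needs one more round, B needs two, and the pot holds 64 coins.
print(split_pot(64, 1, 2))
```

Exact fractions are used instead of floats so that the shares always add up to the whole pot.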

Thomas Bayes (1702–1761): How do we update probabilities with new information?
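Bayes' question about updating probabilities can be illustrated with a minimal sketch for a single yes/no hypothesis. The function name, its parameters, and the example numbers are hypothetical and not taken from any data set.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(hypothesis | evidence) from a prior and the two likelihoods."""
    # Total probability of seeing the evidence at all.
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence


# Hypothetical numbers: a rare condition (1%) and an imperfect test.
posterior = bayes_update(prior=0.01, likelihood_if_true=0.9, likelihood_if_false=0.05)
print(posterior)
```

Even after a positive result the posterior stays fairly low here (roughly 0.15), because the prior was small and false positives are comparatively common.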

Statistics was formalized later, after more data became available (John Graunt’s 1662 analysis of London census data).

Ronald Fisher (1890–1962) claimed he was unable to do his work (statistics) without a calculator.

Computation

Euclid: GCD, perhaps earliest nontrivial example
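Euclid's procedure for the greatest common divisor is short enough to show in full. The version below is the usual modern remainder-based form, which is an assumption about presentation rather than Euclid's original wording.

```python
def gcd(a, b):
    """Greatest common divisor by repeated remainders (Euclid's algorithm)."""
    while b != 0:
        # The common divisors of (a, b) are the same as those of (b, a mod b).
        a, b = b, a % b
    return abs(a)


print(gcd(1071, 462))
```

Each step replaces the pair with a strictly smaller one, so the loop must terminate; that guarantee is part of what makes this an algorithm rather than just a recipe.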

Muhammad ibn Musa al-Khwarizmi (~800): also Arabic numerals and algebra

Procedures later inspired Boole et al. to formalize math as logic

Limitations

Kurt Gödel (1906–1978): there is an effective procedure to prove any true statement in the logic of Frege and Russell, but that logic cannot fully characterize the natural numbers.

Incompleteness theorem: any theory powerful enough to describe the natural numbers contains true statements that cannot be proved within the theory.

Principia Mathematica :(

This also means that some functions on the integers cannot be represented via algorithm…

Alan Turing (1912–1954)

What is computable?

Church-Turing thesis discusses general computability with the Turing Machine (1936)

There are things that are not computable… (Halting Problem)

More relevant than computability is tractability, that is, “can be solved in a reasonable amount of time”

NP-completeness: later formalization of computational complexity and tractability

History of Artificial Intelligence

The inception of artificial intelligence (1943–1956)

Warren McCulloch and Walter Pitts (1943): physiology of neurons + formal analysis of propositional logic + Turing’s theory of computation = “Neural Networks”

“Any computable function could be computed by some network of neurons” and “logical connectives” could be simple network structures (the first working transistor was created at Bell Labs in 1947)
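The claim that logical connectives can be built from simple network structures can be illustrated with threshold units. This is a simplified sketch, not McCulloch and Pitts' exact formalism: the signed weights, the thresholds, and all names here are assumptions made for the example.

```python
def threshold_unit(weights, threshold):
    """Build a neuron that fires (1) when its weighted input sum reaches the threshold."""
    def fire(*inputs):
        total = sum(w * x for w, x in zip(weights, inputs))
        return 1 if total >= threshold else 0
    return fire


# Logical connectives as single units (weights and thresholds chosen by hand).
AND = threshold_unit([1, 1], 2)
OR = threshold_unit([1, 1], 1)
NOT = threshold_unit([-1], 0)


def XOR(a, b):
    """Exclusive-or needs a small network of units rather than a single one."""
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```

Because AND, OR, and NOT suffice to express any Boolean function, wiring such units together gives a network for any finite logical expression.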

They also suggested that such networks could learn (“machine learning”); Donald Hebb (1949) later proposed a simple rule for updating connection strengths.

Marvin Minsky (1927–2016) and Dean Edmonds built the SNARC (Stochastic Neural Analog Reinforcement Calculator) in 1950.

Turing was lecturing as early as 1947… (Turing test, machine learning, genetic algorithms, reinforcement learning).

“Computers should learn rather than have intelligence programmed in”

“Maybe we shouldn’t do this…”

Dartmouth

John McCarthy et al. organized a two-month workshop:

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

At the workshop, Allen Newell and Herbert Simon (Carnegie Tech) presented the Logic Theorist (LT).

Early enthusiasm, great expectations (1952–1969)

This period was characterized by “Can AI do X? Let’s find out!”

After LT, Newell and Simon built the General Problem Solver (GPS)

The physical symbol system hypothesis was formulated after GPS, and states that “a physical symbol system has the necessary and sufficient means for general intelligent action.”

Arthur Samuel’s AI checkers program used reinforcement learning to meet and exceed his own skill

In 1958 McCarthy defined Lisp, which would become the dominant AI language for the next 30 years.

Later, his Advice Taker would demonstrate the ability to consume and use knowledge without being reprogrammed.

At MIT, Minsky supervised students working on limited-scope projects, “microworlds”

Closed-form calculus integration

Geometric analogy problems

Algebra story problems “STUDENT”

“blocks world”

A dose of reality (1966–1973)

It is not my aim to surprise or shock you—but the simplest way I can summarize is to say that there are now in the world machines that think, that learn and that create. Moreover, their ability to do these things is going to increase rapidly until—in a visible future—the range of problems they can handle will be coextensive with the range to which the human mind has been applied.

– Herbert Simon (1957)

Informed introspection (as opposed to actual analysis)

Ignorance of intractability

The fact that a program can find a solution in principle does not mean that the program contains any of the mechanisms needed to find it in practice.

Genetic programming suffered a similar fate

Expert Systems (1969–1986)

What if, instead of general searches through basic steps to find a solution (weak methods), we depend on domain-specific knowledge?

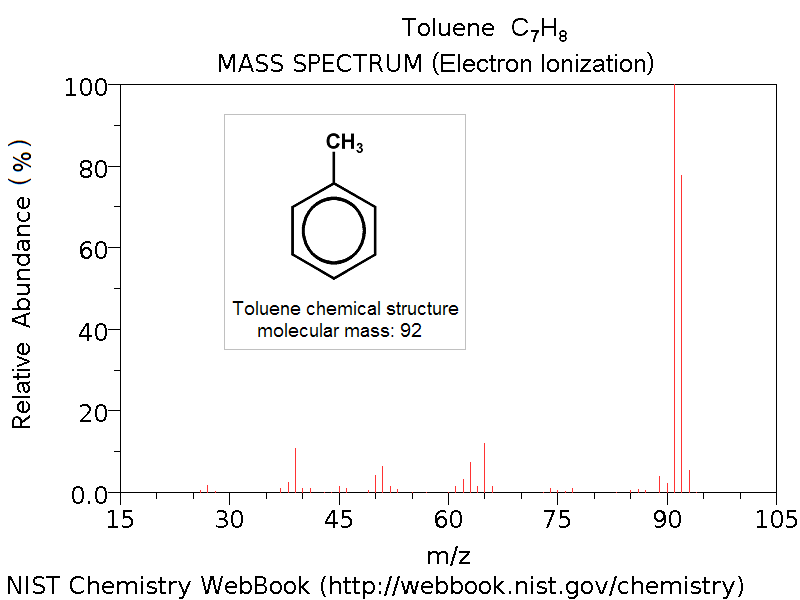

DENDRAL (1969) took this approach using molecular formula and mass spectrometer data to predict molecular structure.

This takes a significant amount of collaboration with domain experts

MYCIN

Blood infection diagnosis system

~450 rules, derived from experts, not first principles

MYCIN operated about as well as the experts, sometimes better than junior doctors!
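The general idea of encoding expert knowledge as rules can be sketched with a tiny forward-chaining loop. This is a generic illustration and not MYCIN's actual inference procedure; the `RULES` below are invented toy rules (not medical knowledge), and the function name is hypothetical.

```python
# Hypothetical toy rules: (set of conditions, conclusion). Not real medical advice.
RULES = [
    ({"fever", "cough"}, "respiratory infection"),
    ({"respiratory infection", "chest pain"}, "order chest x-ray"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no rule adds a new conclusion."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"fever", "cough", "chest pain"}, RULES)))
```

The knowledge lives entirely in the rule list, which is why building such a system depends so heavily on collaboration with domain experts.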

R1

First successful commercial (as opposed to research) expert system was R1 from DEC (1982).

For new computer system orders!

In the early 80s, the Japanese government invested over $1.3 billion in Prolog systems. The US had a similar program.

By the end of the 80s, nearly every corporation had an AI group, either using or investigating expert systems.

Winter

At the end of the 80s came the “AI Winter”: companies failed to deliver on increasingly extravagant promises.

Expert systems are expensive to build and maintain

Don’t (historically) deal with uncertainty well

Didn’t (historically) learn from experience

Return of Neural Networks

“Back-propagation” was reinvented in the 80s by four separate groups (retracing work from the 60s)

This resurgence of neural networks (“connectionist” models) competed with symbolic and logic models.

Neural networks capture messy concepts better than more rigid systems.

Big Data (2001–now)

Neural networks are extremely data hungry and require lots of information to properly train.

Neural networks often seem useless when their data is small; when the data is big, they can perform remarkably well.

Deep learning (2011–now)

Multi-layer neural networks can overcome many of the early weaknesses of neural networks.

Deep neural networks are even more data hungry, which has limited their use until recently.

Deep neural networks are extremely computationally expensive, which has limited their use until recently.

But they have allowed the creation of other systems like GPT-3/4 (ChatGPT)